Large Language Models (LLMs) and Chatbots: History, Definitions, and Popular Models

A key challenge in an era of rapidly growing data volumes is analyzing and interpreting that data efficiently. Modern technologies such as large language models are changing how companies use information. These models power tools like ChatGPT and Bard, enabling them to understand complex messages and generate responses that read naturally to the user. Below, we look at the potential of large language models and their practical applications in streamlining business processes.

History of Large Language Models (LLMs)

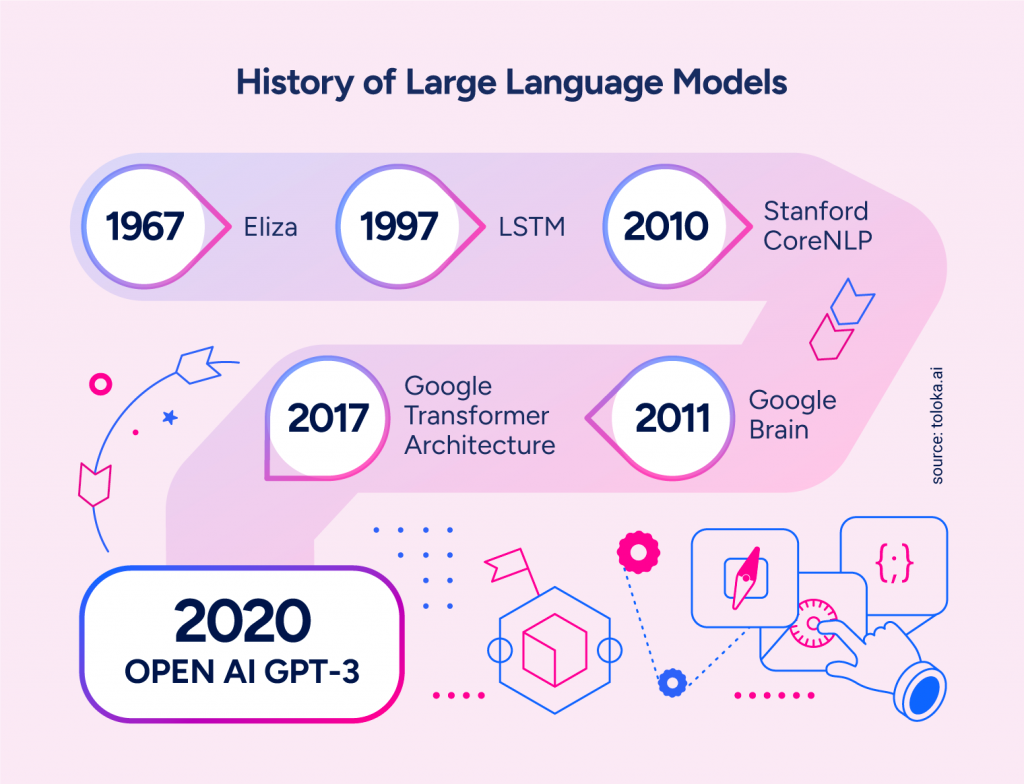

Although chatbots and natural language processing are most commonly associated with the release of ChatGPT by OpenAI in 2022, the history of these technologies and the journey they had to undergo to reach the mainstream dates back much further than it might initially seem.

Large Language Models (LLMs) are a type of computational model that, through training on vast datasets, has gained the ability to process, understand, and mimic human language. Their origins trace back to 1954, when scientists from IBM and Georgetown University collaborated on a system capable of translating text from Russian to English, an early step in the research that eventually led to LLMs.

Initially, models were trained primarily for simple tasks, such as predicting the next word in a sentence. Over time, however, it became clear that they could gather and utilize vast amounts of data and perform more complex commands by analysing the structure and meaning of words.
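To make "predicting the next word" concrete, here is a minimal sketch of a toy bigram model, which simply counts which word most often follows another. It is an illustration only: the corpus and the function names are invented for this example, and real LLMs use neural networks rather than raw counts.

```python
from collections import Counter, defaultdict
from typing import Optional


def train_bigram_model(corpus: list[str]) -> dict[str, Counter]:
    """Count, for every word, which words follow it in the corpus."""
    follower_counts: dict[str, Counter] = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        # Pair each word with the word that comes right after it.
        for current_word, next_word in zip(words, words[1:]):
            follower_counts[current_word][next_word] += 1
    return follower_counts


def predict_next_word(model: dict[str, Counter], word: str) -> Optional[str]:
    """Return the most frequent follower of `word`, or None if unseen."""
    followers = model.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]


# Hypothetical toy corpus, invented for this illustration.
toy_corpus = [
    "the customer asked a question",
    "the customer asked for help",
    "the assistant answered the question",
]
model = train_bigram_model(toy_corpus)
print(predict_next_word(model, "customer"))  # asked
```

The same idea, scaled up to billions of parameters and far longer contexts, is what lets modern models move from finishing a sentence to following complex instructions.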

Of course, the term “large” is not accidental. It refers to the enormous size of the architecture, which is based on neural networks with a very large number of parameters, often in the billions.

Before these models reached their current scale, scientists worked for over half a century on smaller systems such as ELIZA, one of the first chatbots, and LSTM (Long Short-Term Memory) networks.

The Evolution of Large Language Models and Their Role in AI Development

Since 2018, researchers have been building increasingly advanced tools. A particularly significant milestone was Google’s introduction of the BERT model. In its larger variant, BERT was a bidirectional model with 340 million parameters. Thanks to the wide range of data on which it conducted “self-supervised” learning, it could understand the relationships between words. Google later reported that BERT was used in almost every English query made through its search engine.

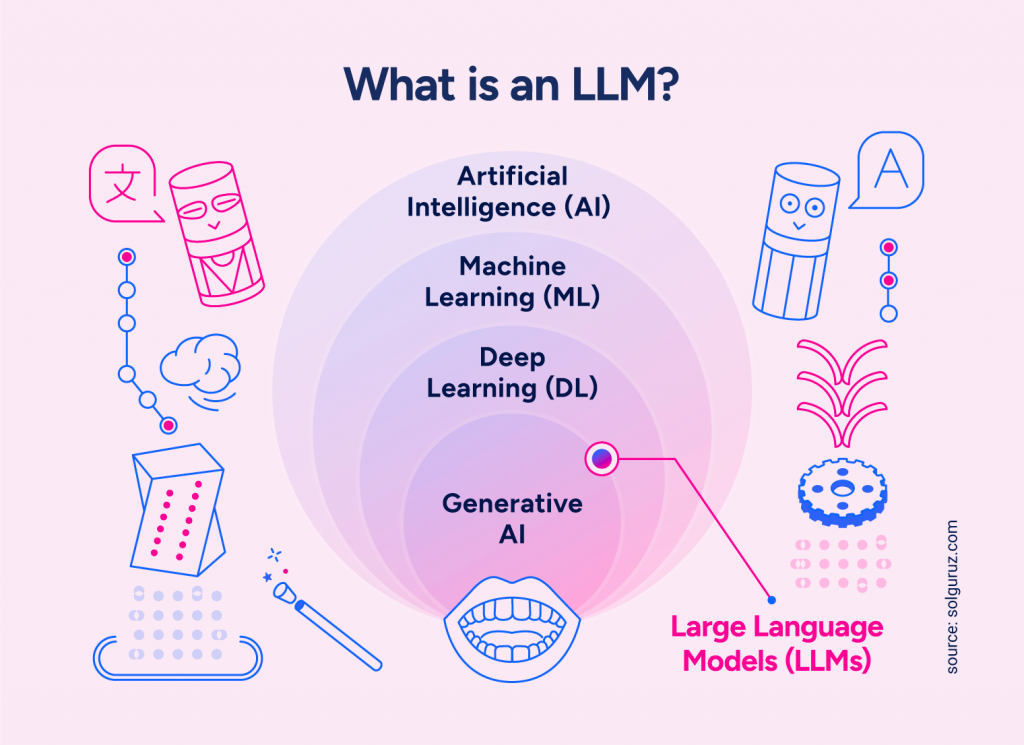

The dynamic development of artificial intelligence opens up new possibilities. However, it also raises questions about the differences between terms such as large language models (LLMs), generative artificial intelligence (Generative AI), and machine learning. We have discussed these topics in detail in one of our previous articles. Still, it is worth emphasizing that LLMs are a specific model type that combines generative AI features with deep learning techniques. Their primary area of operation remains text processing and generation.

Popular Large Language Models

Large language models (LLMs) are a rapidly evolving field. It is difficult to keep up with the latest developments, let alone test each one. Discussing all available models would be highly challenging. We have focused on the most commonly used ones with the most significant impact on the modern market.

GPT-3.5/4/4o

It is hard to believe that less than two years passed between the release of versions 3.5 and 4o. Over that time, the capabilities and popularity of this model from OpenAI, the market pioneer, have evolved significantly.

After ChatGPT was made available to the public in November 2022, the dynamic development of LLM applications in business began.

ChatGPT maintains its position as the market leader, distinguished by the first commercial implementations in tools, including those from tech giants, such as Apple Intelligence and Microsoft Copilot.

LLaMA

LLaMA (Large Language Model Meta AI) is a family of language models developed by Meta (formerly Facebook).

- Availability: Initially available only to researchers and developers. Now, it is more widely accessible, especially in the LLaMA 2 version.

- Sizes: Models vary in size, from versions with fewer parameters (7B, 13B) to large models such as 65B (65 billion parameters).

- Performance: Smaller models are optimized for lower computational resource consumption, making them attractive for applications running on local hardware. Larger versions, on the other hand, can compete with the most significant models in the industry in terms of response quality and context understanding.

- Applications: Used for various tasks, such as natural language processing (NLP), text analysis, and conversational AI.
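The link between model size and hardware requirements can be estimated with simple arithmetic. The sketch below assumes the common rule of thumb that weights stored in 16-bit precision take 2 bytes per parameter. The precision table and function name are assumptions added for illustration, and the estimate covers weights only, not the extra memory needed while the model is running.

```python
# Approximate bytes needed to store one parameter at a given precision.
# These precisions are illustrative assumptions, not taken from Meta's documentation.
BYTES_PER_PARAMETER = {"fp16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(parameter_count: int, precision: str = "fp16") -> float:
    """Estimate the memory (in GB) needed just to hold the model weights."""
    return parameter_count * BYTES_PER_PARAMETER[precision] / 1e9


# Model sizes mentioned in the list above.
model_sizes = {"7B": 7_000_000_000, "13B": 13_000_000_000, "65B": 65_000_000_000}

for name, parameter_count in model_sizes.items():
    fp16_gb = weight_memory_gb(parameter_count, "fp16")
    int4_gb = weight_memory_gb(parameter_count, "int4")
    print(f"{name}: about {fp16_gb:.1f} GB in fp16, about {int4_gb:.1f} GB in int4")
```

Under these assumptions, a 7B model needs roughly 14 GB for its weights in 16-bit precision, which is why the smaller variants are the ones typically considered for local hardware.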

Mistral

Mistral is an advanced language model developed by Mistral AI that is distinguished by its high precision and efficiency.

- Comparison to LLaMA: Although similar in size (number of parameters) to LLaMA, it surpasses it in many tests and applications, particularly in the precision of generated responses and the ability to follow instructions.

- Optimization: The model is optimized for both response quality and computational cost, making it more efficient in practice.

- Applications: Ideal for high-precision applications, such as advanced text analysis, content creation, and more demanding AI tasks.

Claude

Claude is a model developed by Anthropic, emphasizing safety compliance and ethical use.

- Features: Capable of generating detailed and elaborate responses. It can understand complex instructions and context provided as a “system message” (initial instruction). It understands humour, subtle details, and linguistic nuances, making it more natural in conversation.

- Safety: Designed to avoid potentially harmful responses, a result of Anthropic’s focus on “AI alignment” (aligning AI with human values).

- Applications: Used in contexts requiring empathy, understanding of humour, or complex analysis.
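The “system message” mentioned above can be pictured as a separate field that travels with every request. The sketch below only builds the request body and makes no network call. It assumes a Messages-style format in which the system message is a top-level field next to the conversation turns. The helper name, the placeholder model name, and the example texts are hypothetical.

```python
import json


def build_request(system_message: str, user_message: str,
                  model: str = "MODEL_NAME", max_tokens: int = 512) -> dict:
    """Assemble a request body with the system message kept apart from the chat turns."""
    return {
        "model": model,            # placeholder, replace with a real model identifier
        "max_tokens": max_tokens,  # upper bound on the length of the reply
        "system": system_message,  # the initial instruction that frames the conversation
        "messages": [
            {"role": "user", "content": user_message},
        ],
    }


payload = build_request(
    system_message="You are a polite support assistant. Answer briefly.",
    user_message="Where can I check the status of my order?",
)
print(json.dumps(payload, indent=2))
```

Keeping the instruction separate from the user's text is what allows one assistant to be given a different tone or role without changing what the user types.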

Gemini

Gemini is the flagship family of language models developed by Google DeepMind. The chatbot built on it was previously known as Bard.

- Multimodality: It can accept input in text, images, audio, and video, making it one of the most versatile models on the market. This feature allows it to solve tasks requiring the interpretation of data in various formats, such as image analysis, speech processing, or multimedia content generation.

- Development: Gemini is part of Google’s advanced ecosystem and leverages DeepMind’s resources, enabling continuous improvement of its capabilities.

- Applications: Used in various applications, from interactive assistants to multimodal analysis in industry and science.

IBM watsonX Assistant

Although IBM watsonX Assistant is not itself a large language model (LLM), it uses NLP (Natural Language Processing) and AI technologies that may include large language models within its architecture. watsonX Assistant is a tool for building intelligent chatbots and virtual assistants optimized for business applications such as customer service and process automation.

- LLM Integration: WatsonX Assistant can use large language models for generative AI features in its solutions, especially since the introduction of the WatsonX platform, which supports the development and deployment of AI models.

- Advantages: WatsonX Assistant relies on pre-trained models and allows for the training and personalization of AI based on specific business data. Thanks to its security, predictability, and ease of management, it excels in enterprise-class applications.

Benefits of Implementing LLM-Based Solutions for Businesses

Process Automation and Time Savings

One of the key applications of large language models (LLMs) is the automation of repetitive and time-consuming processes, allowing companies to save significant resources. It is worth viewing LLMs not as tools replacing humans but as support that complements their work and expands their possibilities.

Models relieve teams of routine tasks, and the solutions built on them can keep evolving. LLM-based systems can be adapted to changing needs and contexts, for example by updating their knowledge sources or by fine-tuning them on collected interactions.

It is not just about speed, though that is impressive, but also about quality. A chatbot using large language models is not limited to schematic responses. It also understands the context of the conversation, so users feel genuinely supported.

These systems can process vast amounts of data in seconds, which, in the case of analysis or reporting, gives companies time savings and an invaluable informational advantage in today’s world.

Personalization at Scale

The time saved through automation is valuable, and it is worth reinvesting it in, for example, personalization. Recommendation systems based on the latest technologies can offer customers products, services, or content tailored to their preferences. This increases customer engagement and satisfaction with the service. Implementing large language models allows companies to understand their customers better, which helps build long-term loyalty and can increase revenue.

Improving Customer Experiences

LLM implementations not only streamline customer experiences but also improve them. Models can provide precise responses to user queries in real time, reducing the time needed to resolve issues.

Additionally, thanks to advanced natural language analysis, large language models can detect user intent, understand the context of the conversation, and tailor responses to resemble human interactions as closely as possible. These features ensure that users experience individualized attention, which improves overall customer experience and positively impacts the company’s market image.

Continuous Learning and Improvement

Solutions based on large language models can be improved continuously. By analyzing user interactions, teams can refine prompts, knowledge bases, and the models themselves, so that responses keep pace with changing customer expectations and market trends. This is particularly important in dynamic industries where user needs can change from day to day or even hour to hour. Companies using these solutions gain a tool that becomes increasingly effective. The continuous refinement of algorithms contributes to better service quality and greater precision in delivering value to customers.

Example of Large Language Models in Action – Actionbot

Actionbot, a modern solution for process automation, can combine the advantages of the IBM WatsonX Assistant engine and the GPT 3.5/4 models. This combination allows it to optimize responses and expand the scope of what it understands. Our virtual AI assistant can tailor responses to customer needs. When responses must rely solely on internal knowledge sources, we use IBM watsonX Assistant. For questions requiring greater creativity, Actionbot leverages the capabilities of the GPT 3.5/4 models, generating natural-sounding, non-standard responses.

To streamline its operation and deliver the best user experiences, Actionbot automatically switches AI engines depending on the query submitted.
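The general idea of switching engines per query can be sketched as a small router. This is a hypothetical illustration, not Actionbot's actual logic: the engine names, the sample knowledge base, and the keyword matching (a stand-in for real intent detection) are all assumptions made for this example.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical internal knowledge base: topic keyword -> approved answer.
KNOWLEDGE_BASE = {
    "return policy": "Items can be returned according to the store's return policy page.",
    "delivery time": "Delivery times are listed on the shipping information page.",
}


@dataclass
class Engine:
    name: str
    answer: Callable[[str], str]


def knowledge_base_answer(query: str) -> str:
    """Answer strictly from the internal knowledge base."""
    lowered = query.lower()
    for topic, answer in KNOWLEDGE_BASE.items():
        if topic in lowered:
            return answer
    return "No approved answer found."


def generative_answer(query: str) -> str:
    """Stand-in for a call to a generative model; no real API is called here."""
    return f"[generated reply to: {query}]"


KB_ENGINE = Engine("knowledge_base", knowledge_base_answer)
GEN_ENGINE = Engine("generative", generative_answer)


def route(query: str) -> Engine:
    """Pick the knowledge-base engine for known topics, the generative engine otherwise."""
    lowered = query.lower()
    if any(topic in lowered for topic in KNOWLEDGE_BASE):
        return KB_ENGINE
    return GEN_ENGINE


for question in ["What is your return policy?", "Suggest an outfit for a summer wedding"]:
    engine = route(question)
    print(f"{engine.name}: {engine.answer(question)}")
```

In this sketch, questions that touch a known topic get a fixed, approved answer, while open-ended questions are passed to the generative engine.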

Moreover, Actionbot can continuously expand its knowledge base with the information it is given, all without manual input, which would not be possible without the combination of both tools. Our product makes it possible to introduce new standards in customer service and data work, ensuring the highest quality of service and maximum user satisfaction.

Implementation Using GPT 3.5/4 – AI Fashion Assistant for Lancerto

One example of combining IBM WatsonX and GPT 3.5/4 is ARI, a virtual assistant we created based on our Actionbot for Lancerto, a brand specializing in premium elegant clothing. ARI was designed to meet the expectations of e-commerce customers who value quick and specific responses 24/7.

The project came with a significant challenge: ARI had to deliver tailored recommendations, guide customers through the purchasing journey, and provide product information. Our solution comprehensively improved customer service, making it available to users at any time.

For more information about our solution, check out our case study.

What Does the Future Hold for LLMs?

Everything indicates that LLMs will continue to evolve, not only with human assistance but also more independently. Many of them are being prepared to learn on their own and expand their capabilities by asking and answering their own questions. This may allow for better verification of their responses and reduce the models’ tendency to “hallucinate.”

Additionally, their development and increasing ability to specialize in specific tasks offer hope for more capable models that can not only draw basic conclusions but also conduct advanced analyses.

Don’t fall behind. Prepare your company for the future and contact us.